Resource Guide: Teaching in the Age of AI

By Amanda Leary, Steve Varela, Roberto Casarez, and Alex Ambrose

Since ChatGPT burst onto the scene in late November 2022, higher education has been buzzing with talk of, quite simply, what to do about it. Generative artificial intelligence tools (large language models such as ChatGPT, image generators like Midjourney and DALL-E, presentation generators like Decktopus and SlidesAI, and a whole host of AI assistants and disciplinary tools) are changing the landscape of education, and the ground is shifting all the time as new technologies continue to be developed.

Higher education is no stranger to disruptive technologies; it wasn’t too long ago that we were reckoning with cell phones, laptops, and calculators, and adapting our pedagogy to meet these emerging trends. Now, we are preparing for the next wave: AI isn’t going anywhere. As we head into the academic year, instructors are challenged with how to adapt their teaching to this new reality. More importantly, instructors – and higher education as a whole – have the opportunity to think deeply about what it means to educate students who will be living in a world filled with AI tools.

This resource guide explains a bit about what generative AI is and how it works, outlines potential uses of and approaches to AI in the classroom, and provides strategies for effective teaching and learning in the age of AI. We will update this guide regularly to respond to the ever-evolving field of AI and its implications for education.

Overview and Definitions

What is generative AI?

Artificial Intelligence (AI), in its simplest definition, is any technology/machine that can perform complex tasks that are typically associated with human intelligence. These tasks can include problem solving, planning, reasoning, and decision making. As the field of AI continues to grow, the terms used and the definitions associated with the terms continue to evolve. The three main types of AI presented in this overview are Artificial Narrow Intelligence, Artificial General Intelligence, and Generative Artificial Intelligence.

Artificial Narrow Intelligence (ANI), which may also be referred to as weak AI or narrow AI, performs specific, but often complicated, tasks such as analyzing large data sets, making predictions, or identifying patterns.

Artificial General Intelligence (AGI) is the ability of technologies/machines to demonstrate broad human-level intelligence. This includes the ability to learn and to apply that intelligence to solve problems.

Generative Artificial Intelligence (GenAI) is a set of algorithms that can create/generate seemingly new, realistic content — such as text, images, and audio — from a set of training data.

GenAI systems, such as ChatGPT and Midjourney, have quickly become a topic of intense conversation as they are challenging long-standing practices in education, particularly in the assessment of knowledge. Additionally, the proliferation of GenAI systems has called into question the need for certain job roles, such as article writers in journalism and programmers/coders in computer science.

What can and can't generative AI do?

GenAI systems typically use deep learning techniques and massively large data sets to understand, summarize, generate, and predict new content. They synthesize seemingly new and realistic outputs based on the data they have been trained on.

GenAI systems cannot think, do, or learn as humans do, even though they may seem to. Additionally, these systems cannot evaluate the accuracy or quality of the data they have learned from or the output they provide. They may not provide accurate references, and they do not understand what they are explaining or the process by which they arrived at the output. They are not content experts, and cannot be relied on as such.

What is a “prompt”?

Generative AI platforms generate output in response to user input, known as prompts. Prompts can include words, phrases, questions, or keywords that users enter to signal the AI to generate a response based on those factors. The better the prompt, the better the results.

A good prompt has four key elements: Persona, Task, Requirements, and Instructions.

- Persona: Prompts starting with “act as…” or “pretend to be…” produce responses in the voice of the role you specify. Setting an appropriate role for a given prompt increases the likelihood of accurate information.

- Task: Be clear about what you want an answer to, what you want the AI generator to do, find, analyze, etc.

- Requirements: Provide as much information as possible to reduce assumptions the generator may make.

- Instructions: Inform the AI generator how to complete the task.

Example: You are an expert computer scientist who has been asked to explain the relationship between sorting and searching techniques. Provide a paragraph comparing and contrasting these two techniques. Be concise and use an academic tone.

You can use this as a starting point and use follow-up directions to refine the result.
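The four elements can also be kept as separate fields and joined into one prompt, which makes it easy to vary one element while holding the others fixed. The following is a minimal Python sketch, not part of any AI platform's API: the `Prompt` class and its `render` method are hypothetical names introduced for illustration, and the way the example prompt above is split across fields is our own reading of it (the example does not state separate requirements, so that field is left empty).

```python
from dataclasses import dataclass


@dataclass
class Prompt:
    """Hypothetical container for the four prompt elements described above."""
    persona: str
    task: str
    requirements: str
    instructions: str

    def render(self) -> str:
        # Join the non-empty elements in a fixed order, separated by spaces.
        parts = [self.persona, self.task, self.requirements, self.instructions]
        return " ".join(p.strip() for p in parts if p.strip())


# The example prompt from this guide, split into elements (our assumed split).
example = Prompt(
    persona=(
        "You are an expert computer scientist who has been asked to explain "
        "the relationship between sorting and searching techniques."
    ),
    task="Provide a paragraph comparing and contrasting these two techniques.",
    requirements="",  # the guide's example does not list separate requirements
    instructions="Be concise and use an academic tone.",
)

print(example.render())
```

To refine a result, you could change a single field (for instance, adding a word limit under `requirements`) and render the prompt again before pasting it into the tool of your choice.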

What are the Potential Uses for Generative AI in the Classroom?

There are many generative AI tools available (both free and paid) to faculty and students, but three types are most commonly used: natural language processing tools (e.g., ChatGPT, Bing, Google Bard), text-to-image generators (e.g., DALL-E, Adobe’s Firefly, Midjourney), and language translators (e.g., DeepL, Reverso). These AI tools offer many benefits to teaching and learning. Here are just a few examples:

For students

Improved understanding of complex course materials: Because these systems are typically excellent at summarizing inputted text, students can use generative AI to help them synthesize, summarize, and explain complex reading materials.

Promoting creativity and idea brainstorming: Students can use generative AI tools to inspire and encourage creativity by helping them in their writing, generating topic ideas or writing prompts and inspiring them to explore other possibilities they may not have thought about before. They can then receive additional support creating outlines for these ideas.

Personalized learning: Students can also use generative AI to develop personalized learning experiences for themselves. They can create their own study guides, sample test questions or problems, and even have a virtual tutor by simulating conversations and receiving instant feedback.

Improved accessibility: Generative AI has the potential to improve accessibility for students with communication impairments, low literacy levels, and those who speak English as a second language. It can provide real-time responses and quick feedback, making learning more accessible and customized.

Check it out: This Canvas site from the University of Sydney—built by students for students—goes more in-depth about how students are using AI to complement their classroom experiences.

For faculty

Efficient content creation: Faculty can use generative AI to streamline the creation of course materials. From drafting a syllabus and writing mini-lectures to creating test questions and even constructing grading rubrics, it can save faculty a lot of time and effort. Generative AI can also generate images to enhance course content in Canvas modules or lecture slides for class discussions, essentially aiding faculty in creating engaging and customized learning materials.

Assessment and feedback: Faculty can use generative AI to assess and provide constructive feedback on composition, style, or technical aspects of student work.

Encourage digital literacy: Too often students use technology without giving much thought to the validity, reliability, and quality of the information they consume. Faculty can use generative AI content as examples to help students scrutinize and evaluate information and images that may be inaccurate or misleading.

It’s important to note that while generative AI offers numerous benefits, there are also considerations and challenges that faculty should be proactive about, such as protecting data privacy, addressing biases in AI-generated content, maintaining a balance between technology and human interaction in the learning process, and guiding students to use these tools ethically. In addition, we recommend that faculty continue to learn about these tools and use them in conjunction with their expertise and pedagogical strategies. If you have any questions about using AI in the classroom or would like a demonstration, contact the Teaching and Learning Technologies team.

Check it out: Teaching Tools just launched an AI tool called Brainstorm to help instructors come up with ideas for learning objectives, case studies, and discussion questions. Try it out and schedule a consultation with us to see how you could incorporate it into your teaching.

Establishing AI Policies for Your Course

Let’s face it: our students are using generative AI tools (whether we think they should or not!). How we respond to that use in our classrooms is up to instructors on an individual basis and will vary based on course goals and disciplinary norms. We recommend taking some time to think about what your approach to students using AI will be. When and how will students be allowed to use AI? Are you asking students to use an AI tool to complete a particular assignment? Should they cite it? Why? Outline your policy on AI in a syllabus statement that includes:

- your expectations for student work as it relates to academic integrity;

- authorized and unauthorized use of AI;

- appropriate acknowledgement of AI use;

- consequences for violating the policy.

Policies on emerging technologies are also good places to get students’ opinions—how are they already using AI in the classroom and their lives, and why? How do they understand the role of AI in their own learning? Using students’ voices to shape your policy on AI communicates respect for their agency and experiences, and can lead to greater overall compliance with the policy.

What are my options?

Prohibit in all contexts.

Depending on your disciplinary context, it may be appropriate to disallow generative AI tools from idea to finished product. Be mindful, however, that such policies can be difficult to enforce and will need a clear explanation for how suspected AI use will be handled. AI detectors are generally unreliable and come with their own set of ethical limitations—for example, Stanford researchers have found that detectors, which many instructors rely on to detect academic misconduct, are biased against non-native English speakers. AI detectors often won’t tell the whole story, so have a plan for how you will approach assessing student work.

Sample language:

Students are not allowed to use advanced automated tools (artificial intelligence or machine learning tools such as ChatGPT or Dall-E 2) on assignments in this course. Each student is expected to complete each assignment without substantive assistance from others, including automated tools.

Use only with prior permission.

If you are experimenting with generative AI assignments, clearly distinguishing between when students are and are not allowed to use AI will help eliminate confusion and make it easier for students to complete all course assignments without issue. For such assignments, outline what the authorized uses are—brainstorming, fact checking, editing, etc.—and provide examples for how to do those tasks with AI.

Sample language:

Students are allowed to use advanced automated tools (artificial intelligence or machine learning tools such as ChatGPT or Dall-E 2) on assignments in this course if instructor permission is obtained in advance. Unless given permission to use those tools, each student is expected to complete each assignment without substantive assistance from others, including automated tools.

Use only with acknowledgement.

Especially when accompanied by instruction in how to use AI tools effectively, you may find that using AI can actually improve student learning. Adopting an approach that allows students to use AI-generated content while being able to distinguish between their work and the tool’s through proper citation enables instructors to see and assess students’ learning.

Sample language:

Students are allowed to use advanced automated tools (artificial intelligence or machine learning tools such as ChatGPT or Dall-E 2) on assignments in this course if that use is properly documented and credited. For example, text generated using ChatGPT-3 should include a citation such as: “ChatGPT-3. (YYYY, Month DD of query). ‘Text of your query.’ Generated using OpenAI. https://chat.openai.com/” Material generated using other tools should follow a similar citation convention.

Use is freely permitted with no acknowledgement.

It’s the Wild West, and students are free to use AI however they’d like. This approach won’t work for every course, but in some disciplines, using AI to complete tasks is the norm. Even if you take this approach, however, it’s important to build AI literacy into your course. You might want to discuss the ethical considerations and learning implications of students using AI to aid their work, and include other modes of assessment to ensure students are meeting your learning goals.

Sample language:

Students are allowed to use advanced automated tools (artificial intelligence or machine learning tools such as ChatGPT or Dall-E 2) on assignments in this course; no special documentation or citation is required.

Whatever you decide, be transparent about your approach with your students to:

- Create clear pathways to success

- Mitigate disputes

- Improve understanding of teaching and learning processes

Check it out: You can find a repository of sample syllabus statements from instructors in a range of disciplinary contexts here.

Strategies for Effective Teaching in the Age of AI

The opportunities AI offers for teaching and learning are as vast as the concerns it raises about assessment. Whether or not you buy into the “homework apocalypse” rhetoric, AI is challenging us as instructors to refresh our pedagogy and provide new ways for students to demonstrate their skills, knowledge, and abilities. You can use the strategies below to create more effective learning experiences, both AI-enabled and more traditional.

Promote metacognition.

Regardless of whether students are using AI to complete assignments, incorporating tasks that ask students to reflect on and analyze their own learning creates an opportunity for them to demonstrate mastery and enhances overall success. Helping students develop a critical awareness of their learning improves their ability to transfer this self-knowledge to other domains.

You might ask students to track when they used AI and why. Did it help them to clarify a point that they didn’t understand? How did using the tool further or complicate their understanding of a topic? Did using AI help them meet the goals of the assignment?

Get specific.

There is no such thing as an “AI-proof” assignment. With enough elbow grease, AI can be leveraged to accomplish almost any task. Though you may not be able to prevent students from using AI in unauthorized ways, you can design assignments that they actually want to do by making assignments authentic to their experience and relevant to their lives.

- Ask students to connect assignments to specific readings from class, or use a point raised in discussion to defend or critique a stance.

- Incorporate a reflective component that asks students to explain how the assignment relates to their personal beliefs or professional aspirations.

- Explore current or local problems that AI doesn’t have access to by using examples that are local to the Notre Dame community or from recent months. Just be mindful that while ChatGPT 3.5 isn’t connected to the internet, Bing Chat and Google Bard are, and have access to real-time information, with mixed results. For example, when we asked Google Bard to provide us with a list of citations for scholarly articles about women mystics in the Middle Ages, none of the results were written this century and many of them were books, not articles. Try running your assignments through each platform to see how the AI responds before giving them to students.

Emphasize process over product.

Scaffolding assignments into smaller checkpoints can deter—though not prevent—unauthorized use of generative AI. If the assignment is AI-enabled, working in steps provides the opportunity to guide students through using AI effectively and ethically. This allows you to give feedback throughout the process and see how students are developing ideas and changing their thinking over time. It also invites metacognition; a final portfolio, for example, lets students create a narrative about the work they have completed from start to finish and explain their choices.

Other ways to demonstrate process:

- Outlines or proposals

- Annotated bibliographies

- Drafts and feedback

You might also consider having students annotate class readings, either independently or collaboratively using Perusall. Annotating, rather than relying on generative AI for summaries, helps students process and understand complex readings and improves literacy.

Check it out: Derek Bruff, author of Intentional Tech: Principles to Guide the Use of Educational Technology in College Teaching (2019) has several blog posts chronicling his efforts to redesign assignments to accommodate AI-based disruption. His posts on reading responses and essays are full of great tips. Schedule a consultation with us to talk about redesigning one of your own assignments.

Flip the classroom.

Traditional classrooms are structured so that “first exposure” to new concepts and topics happens in class, usually through a lecture. Students then practice and apply their knowledge by completing homework assignments outside of class. Flipping the classroom, well, flips this structure. Students are responsible for introducing themselves to the material through pre-recorded lectures or readings, and they complete checks for understanding that prepare them to participate in class. Class time is spent applying what they’ve learned and receiving feedback, usually through active learning.

Examples of a flipped approach:

- Have students annotate readings and come prepared to discuss the main arguments and draw connections between them

- Have students watch a pre-recorded video on a concept and complete a pre-class assessment, which allows you to focus class activities on addressing gaps in knowledge

- Have students work together on a problem set or case study, or work in teams to solve a problem

- Have students map or draw a concept, then explain and compare their drawing with a partner

In-class practice limits students’ ability to use AI tools in unauthorized ways, while also providing an opportunity for students and instructors to experiment with how AI can be used to work on disciplinary problems.

Check for alignment.

The reality that AI can perform lower-level or routine tasks efficiently and accurately challenges us to rethink the purpose of our assignments and what kind of learning we are trying to get our students to demonstrate—in other words, are our learning goals actually what we want for our students, and are our current assignments helping them get there? Learning goals that emphasize the process of learning rather than the product of learning help us create assignments that ask students to engage in more complex and higher-order thinking. Inara Scott, a professor at Oregon State University, developed the “less content, more application” challenge to help move students away from content-based “what” knowledge and toward skills-based “when, why, and how” practice.

This is not to say that students don’t need to know the fundamentals—they certainly do! Be transparent with students about why you are asking them to complete assignments and tasks that could easily be done with AI. Emphasize the importance of the skills they are developing and how they contribute to the value of their learning.

You may want to consider alternative or multimodal assessments that allow students to demonstrate their knowledge of fundamental concepts in non-traditional ways to encourage a more relevant and authentic engagement with the content.

Cultivate inclusivity.

In Cheating Lessons: Learning from Academic Dishonesty (2013), James Lang identifies four conditions that underlie academically dishonest behaviors: 1) an emphasis on performance; 2) infrequent high-stakes assessments; 3) low expectations of success; and 4) extrinsic motivation alone, such as grades (35).

When we create inclusive and supportive learning environments that:

- Cultivate belonging for all students,

- Affirm students’ identities, experiences, and abilities,

- Express confidence in students’ ability to learn and succeed,

- Provide multiple ways for students to demonstrate their learning,

- Are relevant to students’ lives,

we take steps to foster intrinsic motivation in students—the desire to complete a task for its own sake, either out of interest or enjoyment. The strategies outlined above that connect assignments to students’ lives and experiences contribute to belonging and motivation. Likewise, metacognitive assignments hold students responsible and accountable for their learning. Assignments that allow students to demonstrate their learning in multiple ways provide students with the opportunity to be creative, agentic, and leverage their prior skills and abilities. In inclusive classrooms, students may also feel more comfortable seeking help or further guidance when they don’t understand a concept, mitigating the recourse to academically dishonest behavior. Finally, reasonably flexible grading and late work policies can lower the pressure and anxiety around assessments.

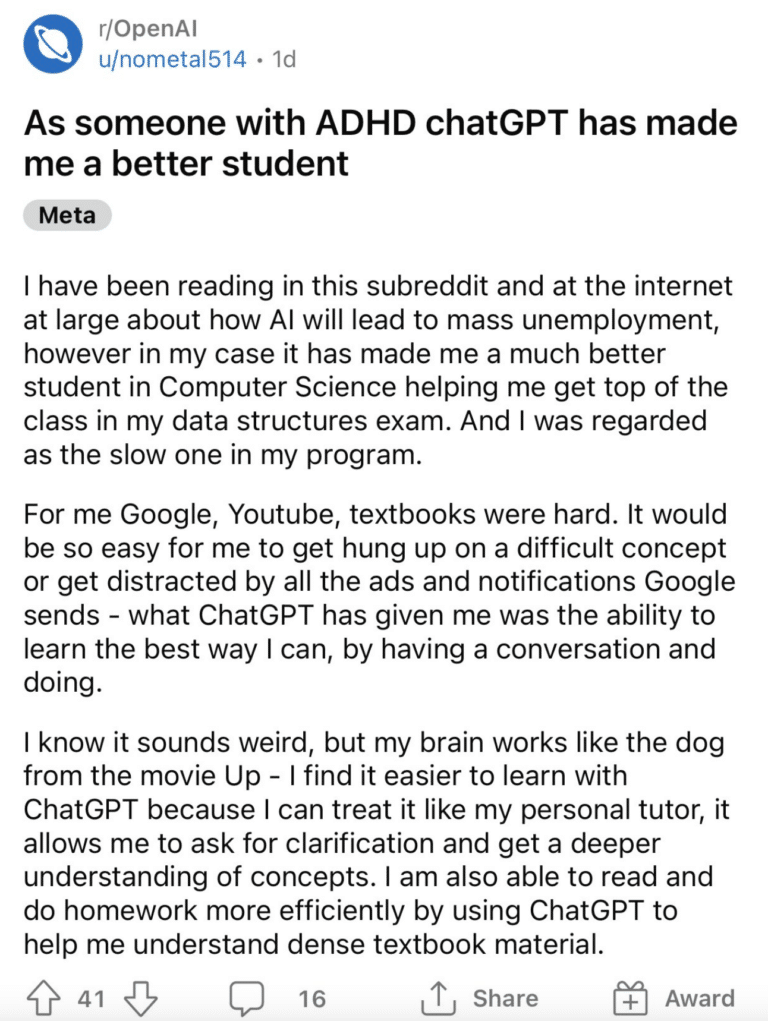

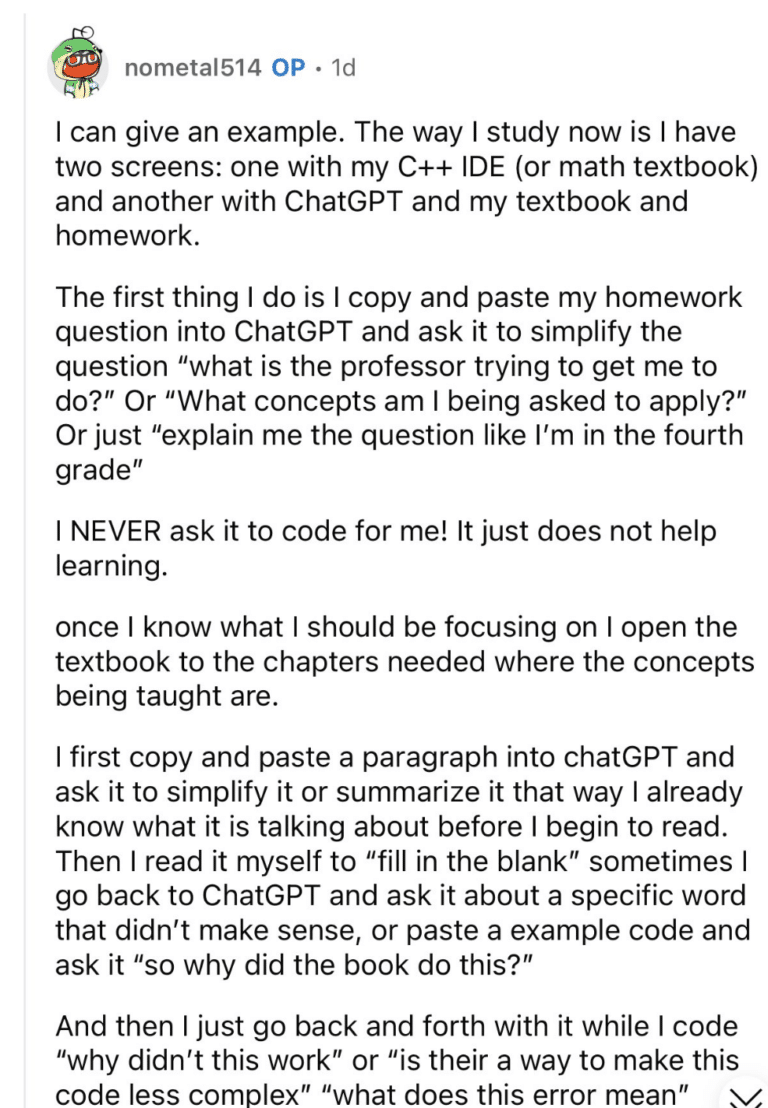

However, AI can also be an asset for more equitable learning. Generative tools like ChatGPT can, with proper checks, help students break down complex topics, expose students to different perspectives, brainstorm ideas, and focus. Maggie Melo, a professor at the University of North Carolina-Chapel Hill, has written about using ChatGPT as someone with ADHD. And students are using it to help them decode assignments:

Screenshots from Reddit.

Check it out: Ethan and Lilach Mollick have written extensively about integrating AI technologies into evidence-based teaching practices. “Using AI to Implement Effective Teaching Strategies in Classrooms” is a great place to start.

Building Critical AI Literacy

Learning to use AI responsibly will be a key skill for students moving forward. Incorporating it into the classroom provides students with the opportunity to practice with AI and develop critical thinking skills, such as transfer and evaluation. The classroom is also an important space for helping students develop critical AI literacy—an understanding of AI’s principles, limitations, ethical considerations, and social impact—in order to use it responsibly. The best way to do that? Encourage its use, but expose its limitations.

Accuracy: AI chatbots, even those with a real-time connection to the internet, get things wrong. This is due, in part, to the predictive algorithms they use to analyze their datasets and respond to a prompt. Generative AI can’t think, and can’t distinguish between fact and fiction, right and wrong. Sometimes, it just makes things up. Part of developing critical AI literacy is adopting a hermeneutic of suspicion: don’t believe everything you read. Have students fact check generative AI’s output to demonstrate its inaccuracies.

Bias: The output generated by AI is only as good as the data it was trained on. This means that platforms like ChatGPT and Stable Diffusion reproduce the biases, stereotypes, and misinformation present in their corpora. And because AI cannot distinguish between right and wrong, it may not recognize its own bias, either. Worryingly, new research shows that these biases are extending to users of generative AI. Have students try prompting a chatbot to provide two sides of an argument, or ask it explicitly to point out where its output may reflect bias.

Intellectual Property and Data Privacy: There is no standard data management position across AI systems. Prior to March 1, 2023, anything submitted to OpenAI’s platforms was incorporated into the model’s training data. Google Bard stores chatbot activity for 18 months by default and conversations undergo human review. It is important for you and students to be aware of how your data will be stored and used. AI also has a serious intellectual property problem. When AI output infringes upon the intellectual property of artists and writers by mimicking their style or technique to produce “derivative” works, there are few legal protections in place for the original creators. Equally unclear is who owns generative AI output. It is important for instructors and students alike to be mindful of the minefield of privacy concerns that come along with using AI.

Bearing these limitations in mind, there are ways to leverage generative AI to help students develop critical AI literacy and engage students in higher-order thinking and deep learning.

Use AI to help students perform disciplinary ways of thinking. You can have students annotate and edit output from generative AI tools to identify fallacies or gaps in its subject-area expertise. Try re-running the same prompt multiple times and have students look for patterns. Then, show them how an expert in the field might craft a response and give students the opportunity to practice writing, comparing their attempts to the AI output. Or, have them edit the output to demonstrate more disciplinary norms and include more specific content, examples, or structures. You can do these activities with text or image generators.

You might also have students test the limits of the AI’s subject-area expertise by fact checking the output. Have students prompt a chatbot to respond as a notable figure from your discipline (or even just as an expert) to see what it does and does not know.

Check it out: You can find other assignments and activities that use generative AI and teach AI literacy here.